Data Cleaning Techniques: Unlock the Power of Web-Scraped Data

- Ashwin Joseph

- May 25, 2023

- 8 min read

Updated: Jan 8

In today's rapidly evolving business landscape, data is becoming increasingly crucial for all types of enterprises. Through data analysis, businesses can optimize their performance, uncovering better ways to operate and thrive. It's safe to say that data is the driving force shaping our world.

Among the various methods of data acquisition, web scraping stands out. However, the data obtained through scraping often turns out to be messy, unclean, or unstructured. This implies the presence of faults such as duplicate records, inconsistency, and incomplete data.

To extract the most valuable insights from data analysis, it is imperative to address this issue by cleaning the data. As the saying goes, "garbage in, garbage out," using unclean data for analysis can have detrimental effects on a business. Therefore, data cleaning takes center stage as a critical step before embarking on data analysis—a process that involves removing faults from unclean data and transforming it into a clean, analysis-ready format.

In this blog, we will delve into the world of scraped data, identifying common faults and equipping ourselves with invaluable data-cleaning techniques to rectify these issues. To illustrate the practical application of these techniques, we will focus on air fryer product data that we have meticulously scraped from Amazon.

Data Exploration

Data exploration is an initial step of data analysis used to explore and visualize data to uncover insights, identify patterns and errors in the data, and understand the characteristics of the data. It's during this step that we find the errors present in a dataset

There are many pandas functions and methods to explore data like head(), tail(), describe(). These functions help us to manually inspect and visualize the data. But a major drawback of these functions is they are time-consuming. In order to overcome this drawback, there is a Python library named Pandas Profiling which generates detailed reports and visualization of the dataset with just a few lines of code. In this blog, we will first explore how to use Pandas Profiling to generate a detailed report of the data and then compare the reports of data before and after applying a few data cleaning techniques.

Pandas Profiling is a Python library that can be installed easily using pip. Once installed, the following code will generate a detailed report of the data in HTML format which can then be viewed in any web browser.

import pandas as pd

from pandas_profiling import ProfileReport

#Read dataset into the variable data

data = pd.read_csv("/content/drive/MyDrive/Air-Fryer-Data.csv")

#Generating report

original_report = ProfileReport(data, title='Original Data')

#Saving the report

original_report.to_file("original_report.html")

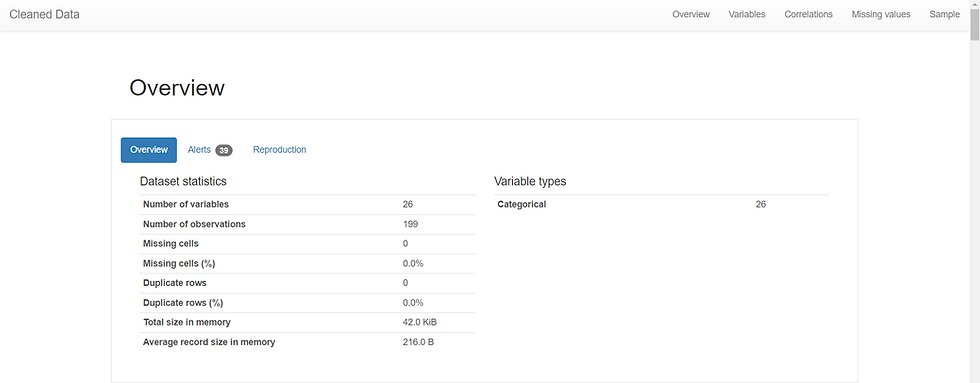

This is an overview of the detailed report. As we can see, it contains three sections - Overview, Alerts, and Reproduction. In the overview section, we can see that our dataset contains duplicate rows. This is an error that needs to be rectified before data analysis. The process for removing duplicate rows from data is known as data deduplication, and the code for the same is shown in the next section of the blog.

This is an overview of the report of the data after applying data deduplication. As we can see in the overview section, there are now no duplicate rows present in our data. Similarly, we can navigate through other sections of the report and identify patterns or errors present in the data, uncover insights, and get a basic understanding of the data. Now let's move on to see some more data-cleaning techniques.

Data Cleaning Techniques

Data Deduplication

The first thing we need to do when we get any data is to check for duplicate records. Duplicate records lead to inaccurate analysis as they overrepresent certain data points, produce confusing data patterns, and mask important information. They also lead to the wastage of storage resources as the same data will be stored many times. Therefore, the first and foremost step in data cleaning is data deduplication which is the removal of duplicate records from the data.

In our data, the column named ‘Product Link’ contains the link to each product on the Amazon page. This column uniquely identifies each record and hence can be used to identify and eliminate duplicates. The code for the same is given below:

data.drop_duplicates(subset=["Product Link"], inplace=True)We first read and stored our data in the variable ‘data’ and then used the drop_duplicates function, which is a predefined pandas function. It takes as a parameter the name of the column which should be used to check for duplicates. It compares and removes duplicate records from the data.

URL Normalization

A URL is an address of a particular product on the internet. The URL we extract while scraping involves unnecessary or redundant components such as query parameters, trailing stash, etc. The data-cleaning process which removes such components from the URL while retaining the essential parts that uniquely identify the product is called URL normalization. This simplifies the URL by reducing their length, makes them more readable, and improves the accuracy of processing tasks that involve urls.

An example of URL normalization is shown below. The same code can be put in a loop, and all the urls present in the dataset can be normalized.

# Create a DataFrame with the URL as a single row

url_df = pd.DataFrame({'url':['https://www.amazon.in/Lifelong-LLHFD322-Digital-functions-Technology/dp/B0B242W2WZ/ref=sr_1_67?crid=3EWKFR0AZLVMY&keywords=air+fryer&qid=1676476027&sprefix=air+fryer%2Caps%2C3150&sr=8-67']})

# Create a DataFrame with the URL as a single row

new_url = url_df['url'].str.extract(r'^(.+?/dp/[\w]+/)')Here, we first create a pandas dataframe with the extracted url as a single row. Then we extract the required URL from this using pandas string manipulation functions. The new simplified URL will be shown below.

https://www.amazon.in/Lifelong-LLHFD322-Digital-functions-Technology/dp/B0B242W2WZ/Whitespace Trimming

While scanning through our dataset, we find that the product names are accompanied by leading and trailing whitespaces. These whitespaces may cause issues when performing data analysis as they may affect string comparison, matching and grouping. Therefore, removing these unwanted whitespaces is a crucial step to make the data consistent and avoid errors during data analysis.

The below code snippet shows how to remove the leading and trailing whitespaces from the product name. The same code can be put in a loop and performed for all the product names in the dataset.

input_str = " Lifelong LLHFD322 Digital Air Fryer 3.5L 1400W Fry, Grill, Bake, Roast & Reheat with 10 Pre-set functions | Touch Control | Rapid Air Technology "

# Create a DataFrame with the input string as a single row

df = pd.DataFrame([input_str], columns=["input_str"])

# Remove leading and trailing white spaces using Pandas string manipulation functions

df["output_str"] = df["input_str"].str.strip()While performing an analysis of the product names, we found that some products don't have a product name. For such products, their remaining data is also not available. When we inspected the page of such products, we came to know that those products were ‘Out of Stock’ when we scraped the data, and that’s why their data is not available. To get the details of ‘Out of Stock’ products, we need to perform scraping at regular intervals, and when the product is ‘In stock’ its data will be extracted.

Numeric Formatting

When numeric values are scraped, they are scrapped in the string format and contain commas and decimal values. This data cannot be used for numerical calculations and statistical analysis as they may be inconsistent and not in the integer format. Therefore, removing commas and decimal values is an important step to make the data consistent and convert it into a format suitable for numerical analysis of data.

The code for removing commas and decimal points is given below. The same code can be put in a loop and applied to all the numerical values in a column.

input_str = "8,000.67"

# Remove comma and decimal point from input string

output_str = input_str.replace(",", "").split(".")[0]

# Convert output string to an integer

output_int = int(output_str)While looking at our dataset, we can see that numeric formatting should be applied to the columns ‘Number of Ratings’, ‘Original Price’, and ‘Offer Price’. We should not apply numeric formatting to ‘Star Rating’ as under this column, the decimal points also contribute to the analysis.

Unit of Measurement Standardization

Some data, like weight or temperature, can be represented using different units of measurement. If these data are represented in different units in the dataset, it makes the data inconsistent and cannot be used for analysis. Therefore, all the measurements should be converted to a common unit of measurement to ensure consistency and make comparability easier.

While looking at our dataset, we can see that unit of measurement standardization should be applied to the columns “min_temperature,” “item_weight,” and “capacity.” The code to standardize units in all these columns is given below.

input_temp = "40 Degrees Fahrenheit"

#Extract the temperature unit from input

temp_unit = input_temp.split()[2]

if temp_unit == "Fahrenheit":

#Extract the temperature from the unit

temp_fahrenheit = int(input_temp.split()[0])

#Convert from Fahrenheit to Celsius

temp_celsius = int((temp_fahrenheit - 32) * 5/9)

else:

temp_celsius = int(input_temp.split()[0])In the column ‘min_temperature,’ temperatures are given in two different units and in string format. Therefore, after applying unit of measurement standardization, we need to apply numeric formatting, too for ease of analysis.

input_capacity = "40 litres"

#Extract the capacity unit

capacity_unit = input_capacity.split()[1]

if capacity_unit == "Quarts":

#Extract the capacity in Quarts

capacity_quarts = int(input_capacity.split()[0])

#Convert Quarts to litres

capacity_litres = int(capacity_quarts * 0.94)

else:

capacity_litres = int(input_capacity.split()[0])

print(capacity_litres)In the column ‘capacity’, the same logic for conversion is applied. The difference is only in the conversion formula. Here, all the values are converted to liters.

input_weight = '1 kg 500 g'

#Function to convert weight to kilogram

def convert_to_kg(weight):

unit = weight.split()[1]

extracted_weight = int(weight.split()[0])

if unit == 'kg':

if(weight.split()[3] == 'g'):

weight_kg = extracted_weight + int(weight.split()[2])*0.001

else:

weight_kg = extracted_weight

elif(unit == 'g'):

weight_kg = extracted_weight*0.001

elif(unit == 'Kilograms'):

weight_kg = extracted_weight

elif(unit == 'Grams'):

weight_kg = extracted_weight*0.001

elif(unit == 'Pounds'):

weight_kg = extracted_weight*0.45

return weight_kg

output_weight = convert_to_kg(input_weight)In the column ‘item_weight’, the same logic for conversion is applied. The difference is only in the conversion formula. Here, all the values are converted to kilograms.

Now that all the units of measurements are standardized, we can remove the unit from each data entry and change the column names to ‘min_temperature (in Celsius)’, ‘capacity (in liters)’, and ‘item_weight (in kg).’

Column Merging

In the dataset, there might be sometimes two columns present that have different names but represent the same information. It increases the data complexity and degrades the data quality. Therefore, such redundant or duplicate columns should be removed before data analysis. This process is known as column merging.

In our dataset, there are two columns named ‘wattage’ and ‘output_wattage’, which represent the same information. But we can see that the column ‘wattage’ does not contain any value. Therefore, it can be removed. The code for the process is given below. The same can be applied to remove any column from the dataset.

data = data.drop('wattage',axis=1)Column Extraction

This data-cleaning process is the direct opposite of the previous one. Here, instead of deleting a column, we split a column into multiple columns. Hence it is also known as column splitting. Sometimes a single column may contain many pieces of information. Splitting this information into different columns will make it easier to analyze and visualize.

In our dataset, the column named ‘Best Sellers Rank’ contains two ranks of the product - one is its rank under the Home and Kitchen category, and the other one is its rank under the Air Fryer category. We will split this column into two columns, named Home and Kitchen Rank and Air Fryer Rank.

input_str = "#321,112 in Home & Kitchen (See Top 100 in Home & Kitchen) #72 in Air Fryers"

# Use regular expressions to extract the rankings

home_kitchen_rank = re.findall(r'\#(\d+,\d+)', input_str)[0]

air_fryers_rank = re.findall(r'\#(\d+)', input_str)[1]

# Create a pandas DataFrame with the extracted rankings

df = pd.DataFrame({'Home & Kitchen Rank': home_kitchen_rank,

'Air Fryers Rank': air_fryers_rank},

index=[0])The above code can be put in a loop to apply to all the products.

Conclusion

This blog has covered various errors that can occur in scraped data and shared techniques to tackle them. It's crucial to understand and address these errors because they have a significant impact on our analysis. When it comes to data analysis, our primary goal is to gain insights and learn from the data. And guess what? Accurate learning is only possible when our data is accurate too. That's why data cleaning takes the spotlight as the most crucial step in the whole data analysis process.

To ensure your data is free from errors and ready for analysis, consider leveraging the expertise of Datahut's web scraping services. We specialize in providing clean, ready-to-use data that empowers your analytical efforts. Take the next step towards maximizing the potential of your data by engaging Datahut's web scraping services today.